A personal project to create an AI system that remembers, speaks and acts autonomously.

Why I’m Building This

I’m interested in developing AI systems that feel less disposable than typical chatbots. This project is an exploration of real-time cognition, autonomous memory management and voice interaction without relying on prepackaged frameworks.

The goal is to build an architecture that can process, store and act on information independently, while remaining transparent and extensible.

What It Is

A self-hosted AI platform designed to explore:

- Autonomous memory extraction and retention across conversational sessions

- Autonomous long-term knowledge promotion into vector and graph databases for persistent storage and retrieval

- Real-time speech synthesis and transcription with multiple interchangeable engines

- Reflex system that can trigger an asynchronous command dispatcher to modify state and execute actions mid-speech

- Task scheduling and execution, including future support for autonomous task creation
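The reflex idea can be illustrated with a minimal sketch. It assumes, purely for illustration, that the LLM embeds commands inline in its output using a hypothetical `[[name:argument]]` syntax; the project's actual command format is not described here. `ReflexDispatcher`, `set_mood`, and `fake_llm` are hypothetical names. The dispatcher strips commands from the text stream before it reaches TTS and launches each handler as an `asyncio` task, so speech keeps flowing while the action runs.

```python
import asyncio
import re
from typing import AsyncIterator, Awaitable, Callable, Dict, List

# Hypothetical inline command syntax emitted by the LLM: [[name:argument]]
COMMAND_RE = re.compile(r"\[\[(\w+):([^\]]*)\]\]")

Handler = Callable[[str], Awaitable[None]]


class ReflexDispatcher:
    """Removes inline commands from streamed LLM text and runs them concurrently."""

    def __init__(self, handlers: Dict[str, Handler]) -> None:
        self.handlers = handlers
        self.pending: List[asyncio.Task] = []

    async def filter_stream(self, chunks: AsyncIterator[str]) -> AsyncIterator[str]:
        buffer = ""
        async for chunk in chunks:
            buffer += chunk
            # Dispatch every complete command found so far, yielding the text before it.
            while (match := COMMAND_RE.search(buffer)) is not None:
                if match.start() > 0:
                    yield buffer[: match.start()]
                self._dispatch(match.group(1), match.group(2))
                buffer = buffer[match.end():]
            # Hold back anything that could be the start of an unfinished command.
            cut = buffer.find("[[")
            if cut == -1 and buffer.endswith("["):
                cut = len(buffer) - 1
            if cut == -1:
                cut = len(buffer)
            if cut > 0:
                yield buffer[:cut]
            buffer = buffer[cut:]
        if buffer:
            yield buffer  # flush trailing text, e.g. an unterminated tag

    def _dispatch(self, name: str, arg: str) -> None:
        handler = self.handlers.get(name)
        # Unknown commands are removed from speech and otherwise ignored.
        if handler is not None:
            # Fire and forget: the action runs while speech keeps streaming.
            self.pending.append(asyncio.create_task(handler(arg)))

    async def drain(self) -> None:
        await asyncio.gather(*self.pending)


async def demo() -> tuple:
    log = []

    async def set_mood(arg: str) -> None:
        log.append(("mood", arg))  # stand-in for a real state change

    async def fake_llm() -> AsyncIterator[str]:
        for piece in ["Sure, let me ", "[[mo", "od:happy]]", "think about that."]:
            yield piece

    dispatcher = ReflexDispatcher({"mood": set_mood})
    spoken = [text async for text in dispatcher.filter_stream(fake_llm())]
    await dispatcher.drain()
    return "".join(spoken), log


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Holding back a partial `[[` at chunk boundaries matters because streamed tokens can split a command across chunks, as in the demo, where `[[mood:happy]]` arrives in two pieces.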

Unlike typical chatbot architectures, this system does not rely on isolated conversation threads. It maintains a single continuous cognitive context, enabling consistent behavior and memory across all interactions and topics.
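One way to picture a single continuous context, as opposed to per-thread sessions, is one object that every channel writes into. This is a sketch under assumptions: `CognitiveContext`, its method names, the prompt layout, and the window size are hypothetical. It pairs a bounded `collections.deque` for short-term memory with a list of context-memory notes.

```python
from collections import deque
from typing import Deque, List, Tuple


class CognitiveContext:
    """A single context shared by every channel, instead of one session per thread."""

    def __init__(self, short_term_limit: int = 20) -> None:
        # Short-term memory: a bounded deque, so the oldest messages drop off automatically.
        self.short_term: Deque[Tuple[str, str, str]] = deque(maxlen=short_term_limit)
        # Context memory: compact notes the system itself revises, prunes, or clears.
        self.context_memory: List[str] = []

    def observe(self, source: str, role: str, text: str) -> None:
        self.short_term.append((source, role, text))

    def build_prompt(self) -> str:
        notes = "\n".join(f"- {note}" for note in self.context_memory)
        recent = "\n".join(f"{role} ({source}): {text}" for source, role, text in self.short_term)
        return f"[context memory]\n{notes}\n[recent messages]\n{recent}"


if __name__ == "__main__":
    ctx = CognitiveContext(short_term_limit=3)
    ctx.context_memory.append("User prefers short answers.")
    ctx.observe("chat", "user", "Remind me about the dentist.")
    ctx.observe("voice", "user", "Also, what's the weather?")  # same context, different channel
    print(ctx.build_prompt())
```

Because `deque(maxlen=...)` discards the oldest entry on each append once it is full, the short-term window stays bounded without explicit cleanup; pruning the context memory itself is left to the system's own revision logic.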

The system is intended as a research-grade implementation of agentic AI capabilities in a controlled environment.

System Overview

The platform currently implements the following capabilities:

- Autonomous memory extraction: Parses conversational data and identifies relevant information to store.

- Short-term memory: Maintains a deque of the most recent messages for immediate context during interactions.

- Hippocampus message buffer: Holds all incoming conversation messages in a temporary buffer. When inactivity is detected, a task is triggered to process the buffer, extract candidate memories, evaluate their relevance, check for graph connections, and persist them to the vector and graph databases. Once processed, the buffer is cleared.

- Context memory: A compact internal monologue maintained autonomously. The system revises, prunes, or clears it as needed so that it reflects only the most relevant state.

- Memory promotion pipeline: Evaluates extracted memories and persists selected content to long-term storage with vector and graph representations.

- TTS/STT pipelines: Generate and transcribe speech in real time using multiple interchangeable engines.

- Reflex system: Executes asynchronous commands during speech synthesis to modify system state or trigger actions.

- Task queue: Schedules and manages tasks, with planned support for autonomous task creation.

- Dynamic model selection: Allows a different LLM to be chosen for each request.

- Voice call integration: Supports bidirectional real-time voice communication that either side can initiate.
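The hippocampus buffer's inactivity trigger can be sketched with `asyncio`. The sketch assumes a hypothetical `consolidate` coroutine that stands in for the extraction, relevance evaluation, graph linking, and persistence steps, and an assumed `idle_seconds` threshold; neither reflects the project's actual values or interfaces.

```python
import asyncio
from typing import Awaitable, Callable, List, Optional, Set

# Hypothetical stand-in for extract -> evaluate relevance -> link graph -> persist.
Consolidator = Callable[[List[str]], Awaitable[None]]


class Hippocampus:
    """Buffers messages and consolidates them after a period of inactivity."""

    def __init__(self, consolidate: Consolidator, idle_seconds: float = 60.0) -> None:
        self._consolidate = consolidate
        self._idle_seconds = idle_seconds  # assumed inactivity threshold
        self._buffer: List[str] = []
        self._timer: Optional[asyncio.Task] = None
        self._inflight: Set[asyncio.Task] = set()

    def add(self, message: str) -> None:
        self._buffer.append(message)
        # Every new message restarts the inactivity timer.
        if self._timer is not None:
            self._timer.cancel()
        self._timer = asyncio.create_task(self._idle_timer())

    async def _idle_timer(self) -> None:
        await asyncio.sleep(self._idle_seconds)
        # Swap out the buffer so it is cleared immediately; new messages start a new batch.
        batch, self._buffer = self._buffer, []
        self._timer = None
        # Run consolidation as its own task so a later add() cannot cancel it.
        task = asyncio.create_task(self._consolidate(batch))
        self._inflight.add(task)
        task.add_done_callback(self._inflight.discard)

    async def drain(self) -> None:
        await asyncio.gather(*self._inflight)


async def demo() -> List[List[str]]:
    batches: List[List[str]] = []

    async def consolidate(batch: List[str]) -> None:
        batches.append(batch)

    hippo = Hippocampus(consolidate, idle_seconds=0.2)
    hippo.add("I moved to Berlin last month.")
    await asyncio.sleep(0.05)
    hippo.add("My sister is visiting next week.")  # resets the timer
    await asyncio.sleep(0.5)  # silence long enough to trigger consolidation
    await hippo.drain()
    return batches


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Swapping the buffer out before consolidation starts means messages arriving mid-consolidation begin a fresh batch instead of being cleared along with the processed one.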

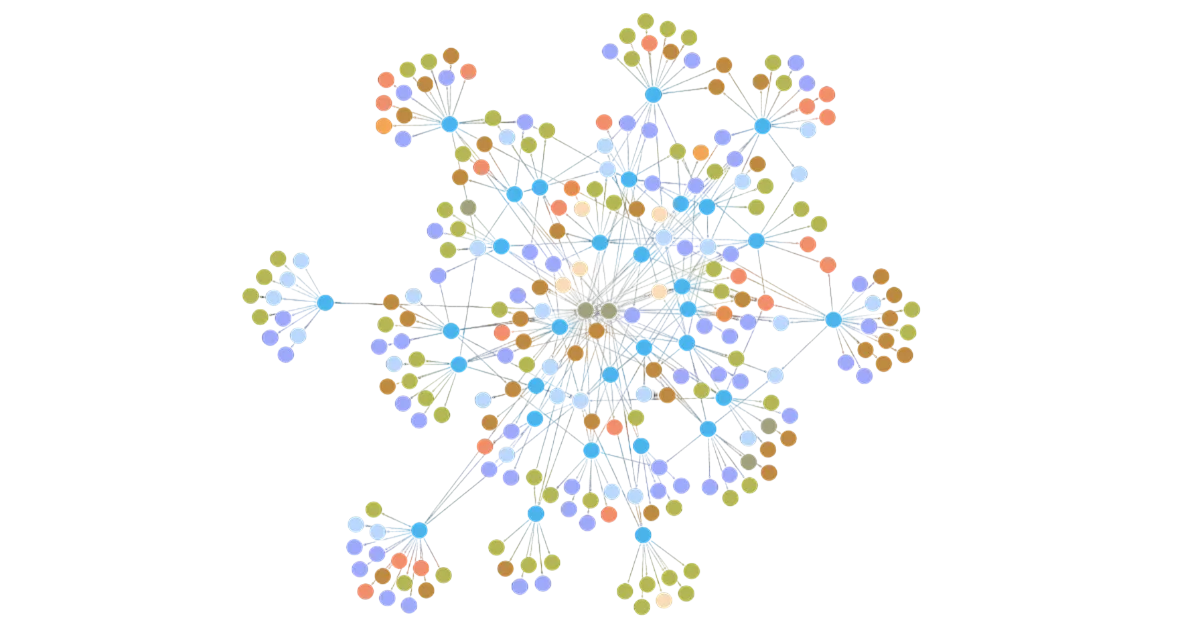

Architecture

The system consists of a frontend interface connected to a FastAPI backend.

The backend coordinates prompting, memory management, speech synthesis, and storage operations.

All components communicate within a single application process to reduce latency and simplify orchestration.
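To illustrate why a single process keeps hand-offs cheap, here is a minimal sketch of streaming stages connected by in-memory `asyncio.Queue`s inside one event loop. The stage names are hypothetical, the LLM and TTS are placeholders, and splitting text at sentence boundaries is only one common way to feed a TTS engine chunk by chunk; it is an assumption, not necessarily how this project chunks its output.

```python
import asyncio
import re
from typing import List

# Split after ., ! or ? followed by whitespace (a simple sentence heuristic).
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


async def llm_stage(tokens: List[str], text_q: asyncio.Queue) -> None:
    """Stand-in for a streaming LLM: forwards each sentence as soon as it is complete."""
    pending = ""
    for token in tokens:
        pending += token
        *finished, pending = SENTENCE_END.split(pending)
        for sentence in finished:
            if sentence.strip():
                await text_q.put(sentence.strip())
    if pending.strip():
        await text_q.put(pending.strip())
    await text_q.put(None)  # end-of-stream marker


async def tts_stage(text_q: asyncio.Queue, audio_q: asyncio.Queue) -> None:
    """Stand-in for a TTS engine: converts each text chunk as it arrives."""
    while (chunk := await text_q.get()) is not None:
        await audio_q.put(f"<audio:{chunk}>")  # placeholder for synthesized audio
    await audio_q.put(None)


async def run_turn(tokens: List[str]) -> List[str]:
    text_q: asyncio.Queue = asyncio.Queue()
    audio_q: asyncio.Queue = asyncio.Queue()
    frames: List[str] = []

    async def playback() -> None:
        while (frame := await audio_q.get()) is not None:
            frames.append(frame)

    # All stages share one event loop: each hand-off is an in-memory queue
    # operation rather than a network hop between services.
    await asyncio.gather(llm_stage(tokens, text_q), tts_stage(text_q, audio_q), playback())
    return frames


if __name__ == "__main__":
    print(asyncio.run(run_turn(["Hello there. ", "How are", " you? I'm", " fine"])))
```

Because the first sentence is handed to TTS before the rest of the reply exists, audio can start while the model is still generating.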

Tech Stack

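- Backend: Python, FastAPI, Uvicorn, WebSockets
- AI: LLMs, RAG
- Vector DB: Qdrant
- Graph DB: Neo4j
- Relational storage: SQLite
- STT: Whisper
- TTS: CSM-1B, Kokoro, ElevenLabs, Fish Audio
- Frontend: React
- Deployment: Docker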

What Makes It Different

Unlike many contemporary conversational AI implementations, this system does not rely on large orchestration frameworks such as LangChain or pre-integrated pipelines.

Memory extraction, task scheduling, reflex handling, and voice synthesis are all built or customized to fit specific requirements around autonomy, transparency, and real-time operation.

All conversational data and knowledge remain fully self-hosted and under strict control, rather than delegated to third-party platforms claiming to “save humanity” while collecting user data.

The design prioritizes maintaining a single cognitive context over isolated conversation threads, enabling consistent behavior across all topics and sessions.

Challenges & Lessons Learned

- Microservice complexity: Distributing every component into separate microservices introduced significant orchestration overhead and additional failure points. Maintaining stable WebSocket connections across services became impractical at scale; consolidating into a single process improved reliability.

- Memory extraction design: Autonomous memory extraction is not a solved problem. It required multiple redesigns to handle consistency, deduplication, and graph relationships without producing incoherent or conflicting data.

- Vector and graph database integration: Combining vector similarity search with graph representations introduces consistency and performance trade-offs. Query reliability and deduplication logic needed extensive tuning to avoid unpredictable behavior.

- Real-time speech synthesis: Achieving low-latency, chunked TTS output while maintaining natural pacing required more iteration and detail work than anticipated.

- Autonomous task scheduling: Letting the system create and schedule tasks autonomously is appealing in theory. In practice, it demands robust safeguards against runaway task generation and unintended state changes.

- Context management: Maintaining a single cognitive context across all interactions is harder than relying on per-thread sessions. It requires continuous pruning, re-evaluation, and memory promotion to prevent bloat and drift.
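The safeguards mentioned under autonomous task scheduling could take many forms. The sketch below assumes two simple ones: a cap on how many generations of self-spawned tasks may chain from a user-created task, and a sliding-window limit on how many autonomous tasks can be created. `GuardedTaskQueue`, its parameters, and the default limits are hypothetical.

```python
import heapq
import itertools
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass(order=True)
class Task:
    run_at: float
    seq: int  # tie-breaker so equal run_at values keep insertion order
    name: str = field(compare=False)
    depth: int = field(default=0, compare=False)  # 0 = user-created; n = n-th self-spawned generation


class GuardedTaskQueue:
    """Priority queue ordered by run time, with two guards on self-created tasks."""

    def __init__(self, max_depth: int = 2, max_autonomous: int = 5,
                 window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._heap: List[Task] = []
        self._seq = itertools.count()
        self._max_depth = max_depth
        self._max_autonomous = max_autonomous
        self._window = window_seconds
        self._clock = clock
        self._recent_autonomous: List[float] = []

    def schedule(self, name: str, delay: float = 0.0,
                 parent: Optional[Task] = None) -> Optional[Task]:
        now = self._clock()
        depth = 0 if parent is None else parent.depth + 1
        if parent is not None:
            # Guard 1: limit how long chains of self-spawned tasks can grow.
            if depth > self._max_depth:
                return None
            # Guard 2: sliding-window cap on autonomous task creation.
            self._recent_autonomous = [t for t in self._recent_autonomous
                                       if now - t < self._window]
            if len(self._recent_autonomous) >= self._max_autonomous:
                return None
            self._recent_autonomous.append(now)
        task = Task(now + delay, next(self._seq), name, depth)
        heapq.heappush(self._heap, task)
        return task

    def pop_due(self) -> List[Task]:
        """Remove and return every task whose run time has arrived, earliest first."""
        now = self._clock()
        due: List[Task] = []
        while self._heap and self._heap[0].run_at <= now:
            due.append(heapq.heappop(self._heap))
        return due
```

The injectable `clock` makes the guards testable without waiting in real time; a real scheduler would also need persistence and a worker that calls `pop_due` periodically.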

What’s Next

- Autonomous Task Scheduling: Extending the task queue so the system can create and prioritize its own tasks without manual input.

- Memory Promotion Refinement: Improving duplicate detection, graph query reliability, and consistency across storage layers.

- Vector Similarity for Graph Nodes: Adding a dedicated vector database to index node embeddings, enabling similarity search when creating new graph entries. For example, before adding a node such as “color blue”, the system will retrieve related existing nodes and avoid creating unnecessary duplicates.

- Model Context Protocol (MCP) Support: Integrating MCP so the platform can securely connect to external tools and data sources in a standardized, model-agnostic way.
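The planned duplicate check for graph nodes boils down to a nearest-neighbor lookup followed by a threshold test. This sketch uses a brute-force cosine similarity scan in pure Python as a stand-in for a vector database such as Qdrant; `NodeIndex`, the 0.9 threshold, and the three-dimensional toy embeddings are assumptions, since real embeddings would come from an embedding model and have many more dimensions.

```python
import math
from typing import Dict, List, Optional, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class NodeIndex:
    """In-memory stand-in for a vector index over graph node embeddings."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold  # hypothetical similarity cut-off
        self.nodes: Dict[str, Vector] = {}

    def nearest(self, embedding: Vector) -> Optional[Tuple[str, float]]:
        # Linear scan; a vector database would use an approximate index instead.
        return max(((name, cosine(embedding, vec)) for name, vec in self.nodes.items()),
                   key=lambda pair: pair[1], default=None)

    def resolve(self, name: str, embedding: Vector) -> str:
        """Return an existing node if one is similar enough, else register a new one."""
        match = self.nearest(embedding)
        if match is not None and match[1] >= self.threshold:
            return match[0]
        self.nodes[name] = embedding
        return name


if __name__ == "__main__":
    index = NodeIndex(threshold=0.9)
    print(index.resolve("blue", [0.9, 0.1, 0.0]))
    print(index.resolve("color blue", [0.88, 0.12, 0.01]))  # reuses "blue"
    print(index.resolve("jazz", [0.0, 0.2, 0.95]))          # new node
```

With these toy vectors, “color blue” resolves to the existing “blue” node, while “jazz” is added as a new node. Choosing the threshold is the hard part: too low merges distinct concepts, too high lets near-duplicates through.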

Why the Code Isn’t Public or FOSS

The project is developed as a private research platform, and there are currently no plans to release the codebase.